How AI Code Assistants Transformed My Development Workflow

Adding AI to my workflow for experimenting, learning and getting more out of it.

As a full-stack developer working on a NestJS + React project, I’ve always sought ways to amplify my productivity without sacrificing quality.

Over the past six months, I decided to seriously integrate AI code assistants, including GitHub Copilot, Gemini Code Assist, Windsurf, and Cursor, into my daily workflow.

What began as cautious experimentation turned into a radical revamp of how I build, debug, and even conceptualize software.

In this technical post, I’ll share my data-backed results, personal reflections, a few honest failures, and advice for other developers thinking about leveraging AI tools.

My Setup and Measurement Approach

Here is what I have set up as my project stack:

Backend - NestJS with TypeScript

Frontend - React with TypeScript and Webpack

Code assistants - GitHub Copilot, Gemini Code Assist, Windsurf, and Cursor, all on their Pro plans

I tracked my progress by logging hours spent per feature, lines of code written, number of manual searches per problem, and bug counts per release.

Each month, I alternated my primary code assistant tool to draw direct comparisons and reviewed code quality via PRs with both AI-generated and hand-written code.

Success metrics

Development Time per Feature (hours)

Number of Context Switches (e.g., Googling, docs)

Bugs per 1000 lines of code

Self-assessed Skill Improvement (weekly reviews)
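The numeric metrics above can be computed from a simple per-feature log. Here is a minimal sketch of how that might look; the `FeatureLog` shape, the `summarize` helper, and the sample values are all hypothetical and are not the actual tracking sheet or measurements from this experiment.

```typescript
// Hypothetical record for one feature; field names are illustrative only.
interface FeatureLog {
  feature: string;
  hoursSpent: number;
  linesOfCode: number;
  contextSwitches: number; // manual searches: Googling, docs lookups
  bugsFound: number;
  aiAssisted: boolean;
}

interface MetricSummary {
  featureCount: number;
  avgHoursPerFeature: number;
  avgContextSwitches: number;
  bugsPerKloc: number; // bugs per 1000 lines of code
}

function summarize(logs: FeatureLog[]): MetricSummary {
  const featureCount = logs.length;
  if (featureCount === 0) {
    return { featureCount: 0, avgHoursPerFeature: 0, avgContextSwitches: 0, bugsPerKloc: 0 };
  }
  const totalHours = logs.reduce((sum, log) => sum + log.hoursSpent, 0);
  const totalSwitches = logs.reduce((sum, log) => sum + log.contextSwitches, 0);
  const totalLines = logs.reduce((sum, log) => sum + log.linesOfCode, 0);
  const totalBugs = logs.reduce((sum, log) => sum + log.bugsFound, 0);
  return {
    featureCount,
    avgHoursPerFeature: totalHours / featureCount,
    avgContextSwitches: totalSwitches / featureCount,
    bugsPerKloc: totalLines === 0 ? 0 : (totalBugs * 1000) / totalLines,
  };
}

// Placeholder values for illustration only, not the results reported in this post.
const sampleLogs: FeatureLog[] = [
  { feature: "example-a", hoursSpent: 10, linesOfCode: 800, contextSwitches: 12, bugsFound: 4, aiAssisted: false },
  { feature: "example-b", hoursSpent: 6, linesOfCode: 1200, contextSwitches: 4, bugsFound: 3, aiAssisted: true },
];

const withAiSummary = summarize(sampleLogs.filter((log) => log.aiAssisted));
const withoutAiSummary = summarize(sampleLogs.filter((log) => !log.aiAssisted));
console.log({ withAiSummary, withoutAiSummary });
```

Splitting the logs by `aiAssisted` before summarizing keeps the two groups comparable on the same metrics. Self-assessed skill improvement is left out because it is a qualitative weekly review rather than a number.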

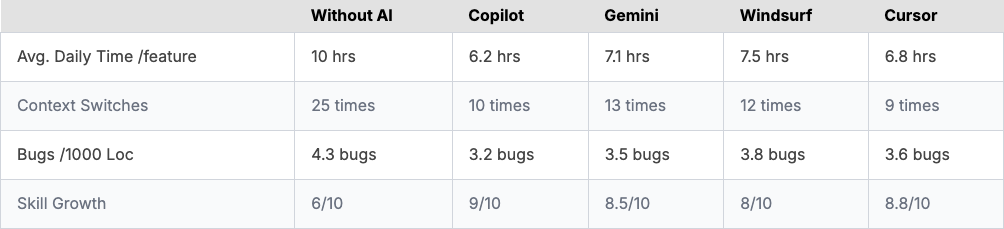

With and Without AI

Here are the findings for seven features built per stack and tracked over time.

How AI is Helping

I think the whole experience can be divided into three major groups:

Time saved

Figuring out syntax

Quality and Learning

1. Massive time savings

Copilot and Cursor led the pack, consistently suggesting boilerplate (think: NestJS controllers, DTOs, service patterns) before I fully finished typing a comment or function name. For standard REST API scaffolding, these tools frequently cut hours into minutes.

For example, the initial user auth setup in Node.js using JWT took almost 12 hours without Copilot and just 6.5 hours with it, and those 6.5 hours included unit and integration tests plus refactoring based on Copilot’s suggestions.
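To put the auth example in relative terms, here is a small worked calculation. The `timeSavings` helper is a hypothetical name of my own, and the 12-hour baseline is treated as exact even though it was "almost 12" in practice.

```typescript
interface SavingsReport {
  hoursSaved: number;
  percentSaved: number;
  speedup: number;
}

// Compares a baseline duration with an AI-assisted duration, both in hours.
function timeSavings(baselineHours: number, assistedHours: number): SavingsReport {
  if (baselineHours <= 0 || assistedHours <= 0) {
    throw new RangeError("both durations must be positive");
  }
  const hoursSaved = baselineHours - assistedHours;
  return {
    hoursSaved,
    percentSaved: (hoursSaved / baselineHours) * 100,
    speedup: baselineHours / assistedHours,
  };
}

// Auth setup: roughly 12 hours without Copilot, 6.5 hours with it.
const authSetup = timeSavings(12, 6.5);
console.log(
  `${authSetup.hoursSaved} h saved, ` +
    `${authSetup.percentSaved.toFixed(1)}% less time, ` +
    `${authSetup.speedup.toFixed(2)}x faster`
);
// prints "5.5 h saved, 45.8% less time, 1.85x faster"
```

So for this feature the saving was a little under half the time, which is a more modest figure than the boilerplate-only scaffolding cases.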

2. Less Googling, more work done

My usual workflow required context switching: jumping away to search for exceptions, TS types, React lifecycle gotchas, or plain syntax.

Copilot delivered ~70% of these answers inline. Windsurf and Cursor were nearly as good, with Gemini a bit slower, requiring more prompt tweaking.

3. Quality and Learning

While AI suggestions weren’t always perfect, reviewing Copilot’s code against my own forced me to revisit best practices. Several times, Copilot suggested modern TypeScript features or React hooks I hadn’t fully explored. Over time, my code reviews became more insightful, and my ability to manually debug or optimize increased.

That said, I still feel that if your questions are poorly phrased, the AI-generated code will never feel right. So invest some time in learning how to write prompts and how to phrase your questions for AI.

The Friction and Failures

Looking at the results, you may think it’s all super cool and easy, but trust me, it is not. It has its fair share of friction and failures, and I had to go through a lot of trial and error to get there.

Overconfident Suggestions

Copilot and Gemini occasionally hallucinated imports or missed business logic nuances (especially with custom NestJS decorators). If I wasn’t vigilant, these made it into the codebase. Cursor proved more conservative and accurate on “edge” NestJS/React patterns.

Testing Shortcuts

AI-generated test cases sometimes mocked too broadly or skipped side effects, inflating test coverage with shallow assertions.
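To make the difference concrete, here is a minimal, framework-free sketch contrasting a shallow test with one that checks the side effect. `SignupService`, `Mailer`, `SpyMailer`, and `check` are hypothetical names invented for this illustration; they are not from my codebase and this is not actual assistant output.

```typescript
// Hypothetical collaborator whose call is the side effect we care about.
interface Mailer {
  sendWelcome(email: string): void;
}

class SignupService {
  private readonly mailer: Mailer;

  constructor(mailer: Mailer) {
    this.mailer = mailer;
  }

  register(email: string): { email: string; active: boolean } {
    const user = { email: email.trim().toLowerCase(), active: true };
    this.mailer.sendWelcome(user.email); // side effect worth asserting on
    return user;
  }
}

// Hand-rolled spy that records every call instead of discarding it.
class SpyMailer implements Mailer {
  readonly sent: string[] = [];

  sendWelcome(email: string): void {
    this.sent.push(email);
  }
}

function check(condition: boolean, message: string): void {
  if (!condition) throw new Error(`Assertion failed: ${message}`);
}

// Shallow: runs the code (so coverage goes up) but asserts almost nothing.
function shallowTest(): void {
  const service = new SignupService({ sendWelcome: () => {} });
  const result = service.register("  Dev@Example.com ");
  check(result !== undefined, "register returns something");
}

// Stronger: asserts on the returned data and on the recorded side effect.
function sideEffectTest(): void {
  const mailer = new SpyMailer();
  const service = new SignupService(mailer);
  const result = service.register("  Dev@Example.com ");
  check(result.email === "dev@example.com", "email is normalized");
  check(result.active, "new user is active");
  check(
    mailer.sent.length === 1 && mailer.sent[0] === "dev@example.com",
    "welcome mail is sent exactly once to the normalized address"
  );
}

shallowTest();
sideEffectTest();
console.log("both tests pass");
```

Both tests pass and both execute the same lines, but if the `sendWelcome` call were deleted from `register`, only the second test would fail.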

Learning Curve

Windsurf’s interface didn’t quite fit my workflow, requiring extra setup for effective multi-file context.

How to approach

If you are planning to invest time and experiment, I would say go ahead. Here are some practices I have adopted to improve my workflow:

Draft with AI - Let Copilot (or an alternative) generate code while I outline intent via comments and pseudo-code.

Pause and Compare - Manually review all AI output, comparing with what I would have hand-written.

Refactor for Clarity - Merge the best of both, and rewrite unclear or over-engineered AI code.

Test and Analyze - Run, test, and note which bugs AI helped prevent, which it introduced.

Reflect Weekly - Every Friday, journal one concept learned via AI and one area where careful manual work still proved essential.
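As an illustration of the "Draft with AI" step above, this is roughly what outlining intent in comments looks like before letting the assistant fill in the body. The `CreateUserDto` shape, the rules, and the function body are hypothetical and hand-written for this post; they are not real Copilot output, and a real NestJS project would more likely use a validation library.

```typescript
// Intent, written first as comments for the assistant to pick up:
// - validate the payload for creating a user
// - email must have exactly one "@" with text on both sides
// - password must be at least 8 characters
// - return human-readable errors; an empty array means the payload is valid

interface CreateUserDto {
  email: string;
  password: string;
}

function validateCreateUser(dto: CreateUserDto): string[] {
  const errors: string[] = [];

  // Split once and default the parts so missing pieces become empty strings.
  const parts = dto.email.split("@");
  const [localPart = "", domainPart = ""] = parts;
  if (parts.length !== 2 || localPart.length === 0 || domainPart.length === 0) {
    errors.push("email must look like name@domain");
  }

  if (dto.password.length < 8) {
    errors.push("password must be at least 8 characters");
  }

  return errors;
}

console.log(validateCreateUser({ email: "dev@example.com", password: "long-enough" }));
console.log(validateCreateUser({ email: "not-an-email", password: "short" }));
```

Because the rules are spelled out in the comments, the "Pause and Compare" step becomes a checklist: each comment line should map to a branch in the generated code.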

These few measures helped me improve my workflow. They may or may not work exactly the same for you, but they’re still worth trying. I would also recommend paying more attention to the following as you go along:

Always review, never blindly trust. AI helps, but you own your code.

Experiment with tools - Copilot and Cursor are more mature for NestJS/React, Gemini shines when combining code with docs. Windsurf fits best for multi-language or heavy context projects.

Use AI to learn - Treat suggestions as learning opportunities: ask why an algorithm or syntax is used.

Pair AI with human collaboration - Share AI discoveries with teammates; you’ll uncover patterns faster together.

Conclusion so far

Using AI code assistants, especially Copilot and Cursor, not only slashed my dev time but also widened my technical skills and focus. By measuring not just speed but also context-switch reduction, bug rates, and my own skill growth, I can confidently say that these tools are now essential partners in my workflow.

Don’t fear AI. Pair with it, learn from it, and let your engineering mindset lead the way to both greater efficiency and smarter development.

For me the experiment is still going on and I’ll be sharing more insights and ways to improve as I progress further.

So keep an eye on this space and subscribe to stay in the loop.

Have you tried Copilot or any other code assistant? Share your thoughts, insights, and tips in the comments.